Security Tip: Restricting Local File Access

[Tip#33] We can easily restrict access to files on remote storage like S3, but what about local files?

It’s trivial to restrict access to files on an external file storage system like S3. Just set the right permissions on the bucket and use the Storage::temporaryUrl() method. However, this doesn’t work for local files. Laravel’s public disk makes local files easy to reach, but does nothing to restrict who can reach them.

One option is to use randomly generated filenames, but that’s just security through obscurity, and has no real benefit if someone can index or list the files. It also doesn’t allow you to define an expiry time. So it doesn’t really do the job.

To solve this problem, we need to use a bit of creativity and a couple of Laravel’s helpers.

Firstly, store the files outside the public/ directory, so they aren’t directly accessible. A subfolder inside storage/ is usually a good spot.

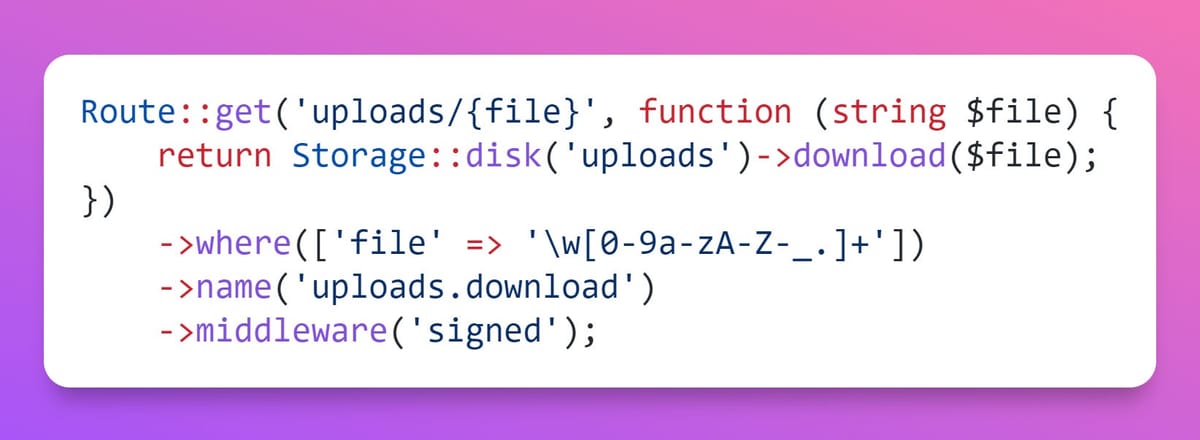
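As a rough sketch of what that could look like in configuration, the route in the next step reads from a disk named `uploads`, so a matching local disk needs to exist. The `storage/app/uploads` root used here is only an assumption; any folder outside public/ would do.

```php
// config/filesystems.php (excerpt, other disks omitted)
'disks' => [
    'uploads' => [
        'driver' => 'local',
        // Assumed location: a private folder under storage/, outside public/.
        'root' => storage_path('app/uploads'),
        'throw' => false,
    ],
],
```

Note that there is no `url` or public visibility setting on this disk, so nothing exposes it through the web server directly.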

Secondly, create a new route to serve the files from, adding the filename as the final route parameter, and add the signed middleware to this route. (I’m sure you can see where this is going!)

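    // Serve files from the private 'uploads' disk as downloads.
    Route::get('uploads/{file}', function (string $file) {
        return Storage::disk('uploads')->download($file);
    })
        // Restrict {file} to letters, digits, hyphens, underscores, and dots (no slashes).
        ->where(['file' => '\w[0-9a-zA-Z-_.]+'])
        ->name('uploads.download')
        // Reject any request whose signature is missing or invalid.
        ->middleware('signed');

Finally, use the URL::signedRoute() and URL::temporarySignedRoute() helpers to generate a secure route to the file.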
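For illustration, generating both kinds of link for the `uploads.download` route might look like the following. The filename `report.pdf` and the 30 minute lifetime are placeholder values, not anything prescribed by Laravel.

```php
use Illuminate\Support\Facades\URL;

// Signed link with no expiry: it stays valid for as long as the app key is unchanged.
$permanent = URL::signedRoute('uploads.download', ['file' => 'report.pdf']);

// Temporary signed link: the signed middleware rejects it once 30 minutes have passed.
$temporary = URL::temporarySignedRoute(
    'uploads.download',
    now()->addMinutes(30),
    ['file' => 'report.pdf']
);
```

The temporary variant is the closer match to Storage::temporaryUrl(), since both take an expiry time.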

The signed middleware ensures that only URLs generated by your app can access the files, matching the functionality of the Storage::temporaryUrl() S3 method.
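If you’re curious why a signed URL can’t be forged or edited, here is a deliberately simplified, framework-free sketch of the idea. It is not Laravel’s actual implementation: the function names `signUrl()` and `verifyUrl()`, the key, and the example URL are all made up for illustration.

```php
<?php
// Simplified illustration of URL signing with an HMAC; NOT Laravel's real code.

/** Append an expiry timestamp and an HMAC signature to a URL. */
function signUrl(string $url, string $key, int $expires): string
{
    $unsigned = $url . '?expires=' . $expires;
    $signature = hash_hmac('sha256', $unsigned, $key);

    return $unsigned . '&signature=' . $signature;
}

/** Return true only if the signature matches and the link has not expired. */
function verifyUrl(string $signedUrl, string $key, int $now): bool
{
    $marker = '&signature=';
    $pos = strrpos($signedUrl, $marker);
    if ($pos === false) {
        return false; // No signature present at all.
    }

    $unsigned = substr($signedUrl, 0, $pos);
    $signature = substr($signedUrl, $pos + strlen($marker));

    // Recompute the signature and compare in constant time.
    $expected = hash_hmac('sha256', $unsigned, $key);
    if (!hash_equals($expected, $signature)) {
        return false; // Path, expiry, or signature was altered.
    }

    // The expiry is covered by the signature, so it can be trusted here.
    parse_str((string) parse_url($unsigned, PHP_URL_QUERY), $query);

    return isset($query['expires']) && $now <= (int) $query['expires'];
}

// Usage with placeholder values.
$key = 'hypothetical-secret-key';
$link = signUrl('https://example.test/uploads/report.pdf', $key, 1700000600);
$isValid = verifyUrl($link, $key, 1700000000);
```

Because the filename and the expiry are both part of the signed string, changing either one invalidates the signature, and only someone holding the key can produce a new one.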

Have you solved this problem a different way? Leave a comment and share your solution!

Notes:

- The example above will initiate a download of the file, so you’ll need to tweak it and possibly add some headers if you want to deal with images or other non-direct-download files. You could base this on requested extension, or have a different route. Whatever works for your application.

- There will be a performance hit on these requests, given they’ll need to boot up Laravel, rather than serve the file directly. If you need high performance handling, then local file storage probably isn’t what you’re looking for.

- Any time you’re dealing with signed requests, don’t forget to be careful of caching. Over-eager caching could result in the files being cached with the signature ignored, allowing direct access that bypasses the signature.
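To make the first and third notes a little more concrete, here is one possible variation: a second route that displays files inline rather than downloading them, and sends a header asking caches not to store the response. The `uploads/view/{file}` path and the `uploads.view` route name are hypothetical, and the right Cache-Control value depends on what sits in front of your app.

```php
use Illuminate\Support\Facades\Route;
use Illuminate\Support\Facades\Storage;

// Hypothetical inline variant of the download route.
Route::get('uploads/view/{file}', function (string $file) {
    // response() streams the file with an inline disposition by default.
    return Storage::disk('uploads')->response($file, null, [
        // Ask browsers and intermediaries not to keep a copy of the file.
        'Cache-Control' => 'private, no-store',
    ]);
})
    ->where(['file' => '\w[0-9a-zA-Z-_.]+'])
    ->name('uploads.view')
    ->middleware('signed');
```

A header like this only helps with caches that respect it, so it’s still worth checking how any CDN or reverse proxy you use treats query strings.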
